Organized by the Technology Innovation Institute (TII)

Retrieval Augmented Generation (RAG) has emerged as a key technology to mitigate the issues that Large Language Models (LLMs) face when they lack adequate knowledge. Given a user’s request, a RAG system searches auxiliary sources to augment the prompt associated with the request with relevant content. RAG is attracting a great deal of attention from the AI community, yet it is still hard to assess the quality of RAG systems in a systematic manner.

The goal of the LiveRAG Challenge is to allow research teams across academia and industry to advance their RAG research and compare the performance of their solutions with other teams, on a fixed corpus (derived from the publicly available FineWeb) and a fixed open-source LLM, Falcon3-10B-Instruct.

The LiveRAG challenge requires an application process, after which selected teams will be awarded AWS and Pinecone compute credits and given early access to TII’s DataMorgana tool.

During the Live Challenge Day, the teams will be provided with a stream of unseen questions and will have to return their answers under strict response-time constraints.

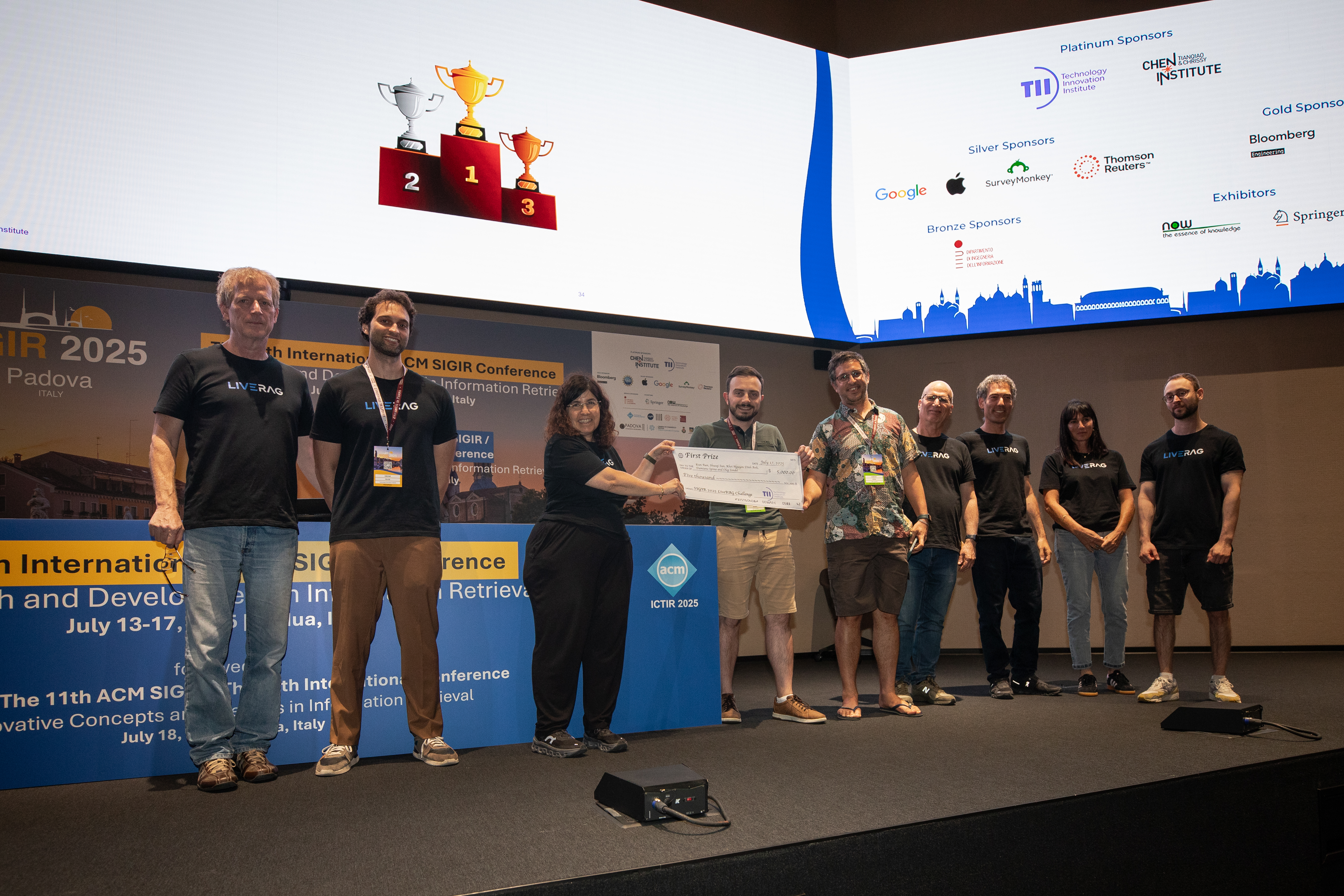

Finalists will be requested to present their results at the LiveRAG workshop day to be held at the SIGIR'2025 conference, during which winners will be announced and prizes will be awarded.

Retrieval Augmented Generation (RAG) has emerged as a key technology to mitigate the issues that Large Language Models (LLMs) face when they lack adequate knowledge. Yet it is still hard to assess the quality of RAG systems in a systematic manner. The goal of the SIGIR’2025 LiveRAG Challenge, which took place from March to May 2025, was to allow research teams across academia and industry to advance their RAG research and compare the performance of their solutions with other teams, on a fixed corpus (derived from the publicly available FineWeb) and a fixed open-source LLM, Falcon3-10B-Instruct.

After an application process, 40 selected teams were awarded up to 1500 USD in AWS compute credits to train their RAG solution, and up to 750 USD in Pinecone compute credits to use/generate their RAG indices. They were also given early access to TII’s DataMorgana tool to help them generate synthetic benchmarks for training and testing.

| Place | Team Name | Team members |
|---|---|---|
| First Place | RMIT-ADMS | Kun Ran, Shuoqi Sun, Khoi Nguyen Dinh Anh, Damiano Spina, Oleg Zendel |
| Second Place | Magikarp | Tong Zhou |
| Third Place | RAGtifier | William Xion, Hailay Teklehaymanot, Oleh Astappiev, Tim Cofala |
| Third Place | UDInfo | Damian Martinez, Catalina Riano, Hui Fang |

During the Live Challenge Day, on May 12, 2025, the teams were provided with a stream of unseen questions. Twenty-five teams returned valid answers within the two-hour time limit. We are delighted to list below, sorted alphabetically by team name, the finalists of the SIGIR'2025 LiveRAG Challenge.

The finalists were identified after a thorough validation and assessment of the teams' answers and artifacts. This included the Correctness and Faithfulness scores computed by DataMorgana following the official evaluation guidelines, manual examination of results by annotators, PC members’ reviews of the teams' reports, and inspection of the code repositories.

The prize winners will be announced during SIGIR’2025 at the LiveRAG Workshop in Padua, Italy on July 17, 2025.

| Team Name | Team members | Institution |
|---|---|---|
| Magikarp | Tong Zhou | Institute of Automation, Chinese Academy of Sciences, China |
| RAGtifier | William Xion, Hailay Teklehaymanot, Oleh Astappiev, Tim Cofala | L3S Research Center, Leibniz University Hannover, Germany |
| RMIT-ADMS | Oleg Zendel, Kun Ran, Shuoqi Sun, Dinh Anh Khoi Nguyen, Damiano Spina | RMIT, Australia |
| UDInfo | Damian Martinez, Catalina Riano, Hui Fang | University of Delaware, USA |

| Date (2025) | Details |
|---|---|
| Mar 3 Feb 24 | Application submission deadline, via the SIGIR 2025 EasyChair site (select the SIGIR 2025 LiveRAG Challenge track) |
| Mar 12 | Application submission notifications and opening of resources |
| Mar 20 Mar 15 | Training and testing tool (DataMorgana) made available to participants |
| May 5 May 8 | “Dry” test of the live service for participants, on a small question set |
| May 12 | Live Challenge Day, hosted on the Hugging Face competition platform: test questions are shared and the live service for answer submission opens |
| May 23 May 19 | Short paper submission deadline |
| June 12 May 29 | Short paper notification and announcement of finalists |
| July 17 | LiveRAG Workshop at SIGIR’2025 in Padua, Italy |

Note


Each team must agree to the Challenge privacy policy and terms of entry as specified in the Challenge’s Terms and Conditions. Teams must also strictly follow the Challenge Guidelines.

Selected teams are expected to build a RAG solution over FineWeb-10BT (a 15M-document subset of FineWeb) and integrate it with the Challenge LLM (Falcon3-10B-Instruct) for answer generation. Selected participants can either build their own search indices over the Challenge dataset or use their allocated credits to take advantage of two prebuilt indices: a Pinecone dense index and an OpenSearch sparse index.
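As an illustration of how results from a dense and a sparse index might be combined before prompting the LLM, here is a minimal sketch using reciprocal rank fusion (RRF). RRF is one common fusion technique chosen for this example, not a method prescribed by the Challenge. The document ids, passages, hit lists, the constant `k=60`, and the prompt wording are all made-up assumptions; the real Pinecone and OpenSearch queries and the Falcon3-10B-Instruct call are deliberately left out.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document ids into a single ranking.

    Each document receives 1 / (k + rank) from every list it appears in.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


def build_prompt(question, passages):
    """Augment the user's question with retrieved passages (hypothetical template)."""
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the passages below.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


# Toy stand-ins for the corpus and for the two retrievers' outputs.
corpus = {
    "d1": "Falcon3-10B-Instruct is an open-source instruction-tuned LLM.",
    "d2": "FineWeb is a large corpus of cleaned web text.",
    "d3": "Dense retrieval compares embedding vectors.",
    "d4": "Sparse retrieval matches query terms against an inverted index.",
}
dense_hits = ["d2", "d1", "d3"]   # hypothetical dense-index ranking
sparse_hits = ["d1", "d4", "d2"]  # hypothetical sparse-index ranking

fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
top_passages = [corpus[doc_id] for doc_id in fused[:2]]
prompt = build_prompt("Which LLM does the challenge use?", top_passages)
print(fused)
print(prompt)
```

The resulting `prompt` string would then be passed to the generation model; how many passages to keep and how to phrase the instructions are design choices each team would tune.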

Participants will get early access to TII’s DataMorgana, a synthetic and configurable benchmark generator for training and testing their system prior to the live event. DataMorgana is a new tool (See arXiv paper for more details) that allows RAG developers to generate synthetic questions and answers from a given corpus via configuration instructions. They will be able to specify the type of questions they are expecting, as well as the type of users who would express them, so as to ensure benchmark diversity. The same DataMorgana tool will be used for generating an original test set at the live event and for automatic evaluation afterwards.
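To make the idea of configurable benchmark diversity concrete, the sketch below samples (question type, user type) combinations from weighted categories. It does not use DataMorgana's actual configuration format or API; the category names, the weights, and the `sample_combination` helper are hypothetical and only illustrate the general principle of drawing a varied test plan from a configuration.

```python
import random

# Hypothetical categories with sampling weights (not DataMorgana's schema).
question_types = {"factoid": 0.5, "comparison": 0.3, "open-ended": 0.2}
user_types = {"novice": 0.6, "expert": 0.4}


def sample_combination(rng):
    """Draw one (question type, user type) pair according to the weights."""
    q = rng.choices(list(question_types), weights=list(question_types.values()))[0]
    u = rng.choices(list(user_types), weights=list(user_types.values()))[0]
    return q, u


rng = random.Random(0)  # fixed seed so the plan is reproducible
plan = [sample_combination(rng) for _ in range(1000)]

# Count how often each combination was drawn.
counts = {}
for combo in plan:
    counts[combo] = counts.get(combo, 0) + 1
print(counts)
```

Each sampled pair would then drive the generation of one synthetic question and answer from a corpus document, so that the benchmark covers the intended mix of question styles and user profiles.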

The Evaluation process will consider two metrics, Relevance and Faithfulness (see Challenge Details for more information), and be conducted in two stages:

Submitted code, prompts, and artifacts will undergo a detailed review to verify adherence to challenge rules.

Comprehensive details can be found in the Challenge Details, while operational instructions will be shared directly with selected participants. Prepare to showcase your skills and push the boundaries of RAG system capabilities!

If no academic team ranks among the top three, the first-ranking academic team in the top ten participants will be considered for the third prize.

Challenge milestones, in order: Application Submission Deadline; Application Submission Notifications and Opening of Resources; Live Challenge Day; Short Paper Submission Deadline (May 19, 2025); Short Paper Notifications; SIGIR LiveRAG workshop.